Creative Writing Dataset with Thought Processes: Unleashing Human-like Creativity in AI

Have you ever read AI-generated text and felt that something was off? Perhaps it lacked personality, had poor logical flow, or offered only a superficial analysis. We've all been there. That's why we are thrilled to introduce the latest open-source work from M-A-P and 2077AI: COIG-Writer, a high-quality Chinese creative writing dataset with thought processes. The project is designed to help language models move beyond a generic "sense of AI" and capture the depth and nuance of human creativity.

What is COIG-Writer?

This dataset offers a rich collection of high-quality Chinese creative writing, along with other text types such as popular science articles. Here's what sets it apart: each piece is paired with a detailed "query" (prompt) and a carefully articulated "thought" (the thinking process behind the piece).

The dataset targets common shortcomings of machine-generated text, such as:

- Logical inconsistencies: Ideas don't connect or flow naturally.

- Lack of distinct personality: Text that feels bland or generic.

- Superficial analysis: Content that skims the surface rather than offering deep insights.

- Overly elaborate language or weak narrative development: Style overshadows substance, and stories fall flat.

The aim is to train language models to produce content that is not only fluent but also shows deeper coherence, individuality, insightful perspectives, and sophisticated narrative construction, closely mirroring human-authored writing. To achieve this, the dataset provides an explicit thought process alongside each finished piece.

The dataset spans approximately 50 subfields of Chinese creative writing and other text generation tasks, all in Simplified Chinese (zh-CN).

Diving into a Data Instance

Each entry in the dataset is structured to provide a comprehensive view of the creative writing process.

- query_type: Categorizes the writing piece (e.g., "Poetry," "Essay," "Fiction/Story," "Scientific Article").

- query: A carefully crafted prompt that often resembles challenging college entrance exam (Gaokao) essay questions or specific content creation requests. These prompts are explicit, creative, and detailed, guiding everything from topic and style to the elements to include and the desired atmosphere.

- thought: This is where the magic happens! It's a metacognitive description of the writing process that outlines the structural plan, author's intent, key compositional elements, stylistic choices, and self-correction steps. Think of it as an internal monologue or a detailed execution plan connecting the query to the answer.

- answer: The high-quality Chinese text itself, carefully selected or crafted to fulfill the query and align with the thought.

- link: The original source URL for the answer.

- score: A multi-line string containing the quality and creativity scores assigned during annotation, including a total score and individual scores for the answer, query, and thought.
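The fields above map naturally onto a simple record type. The sketch below assumes every field is a plain string (as the description of `score` suggests) and shows one possible way to turn a record into a supervised fine-tuning pair that places the thought before the answer. The `WriterRecord` class, the `to_training_example` helper, and the `<think>` delimiters are hypothetical illustrations, not part of the dataset or an official loader.

```python
from dataclasses import dataclass
from typing import Dict


@dataclass
class WriterRecord:
    """One data instance; field names follow the post, types are assumed to be str."""
    query_type: str  # e.g. "Poetry", "Essay", "Fiction/Story", "Scientific Article"
    query: str       # the human-refined prompt
    thought: str     # metacognitive plan connecting the query to the answer
    answer: str      # the final Chinese text
    link: str        # original source URL of the answer
    score: str       # multi-line score string (its exact layout is not specified here)


def to_training_example(record: WriterRecord,
                        think_open: str = "<think>",
                        think_close: str = "</think>") -> Dict[str, str]:
    """Build a prompt/target pair with the thought preceding the answer.

    The <think> delimiters are a hypothetical convention borrowed from
    reasoning-style fine-tuning; they do not come from the dataset.
    """
    target = (f"{think_open}\n{record.thought.strip()}\n{think_close}\n\n"
              f"{record.answer.strip()}")
    return {"prompt": record.query.strip(), "target": target}


if __name__ == "__main__":
    # Placeholder values only; real records contain Simplified Chinese text.
    demo = WriterRecord(
        query_type="Poetry",
        query="(placeholder query)",
        thought="(placeholder thought)",
        answer="(placeholder answer)",
        link="https://example.com/source",
        score="(placeholder scores)",
    )
    print(to_training_example(demo)["target"])
```

Putting the thought ahead of the answer in the target lets a model learn to plan before writing; other layouts (for example, training on the thought and answer as separate turns) are equally plausible uses of the same fields.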

This structure fills a critical gap: the scarcity of high-quality, human-authored texts paired with explicit, deconstructed thought processes. With it, models can develop deeper generative capabilities in Chinese rather than merely imitating surface style.

How Was This Dataset Created?

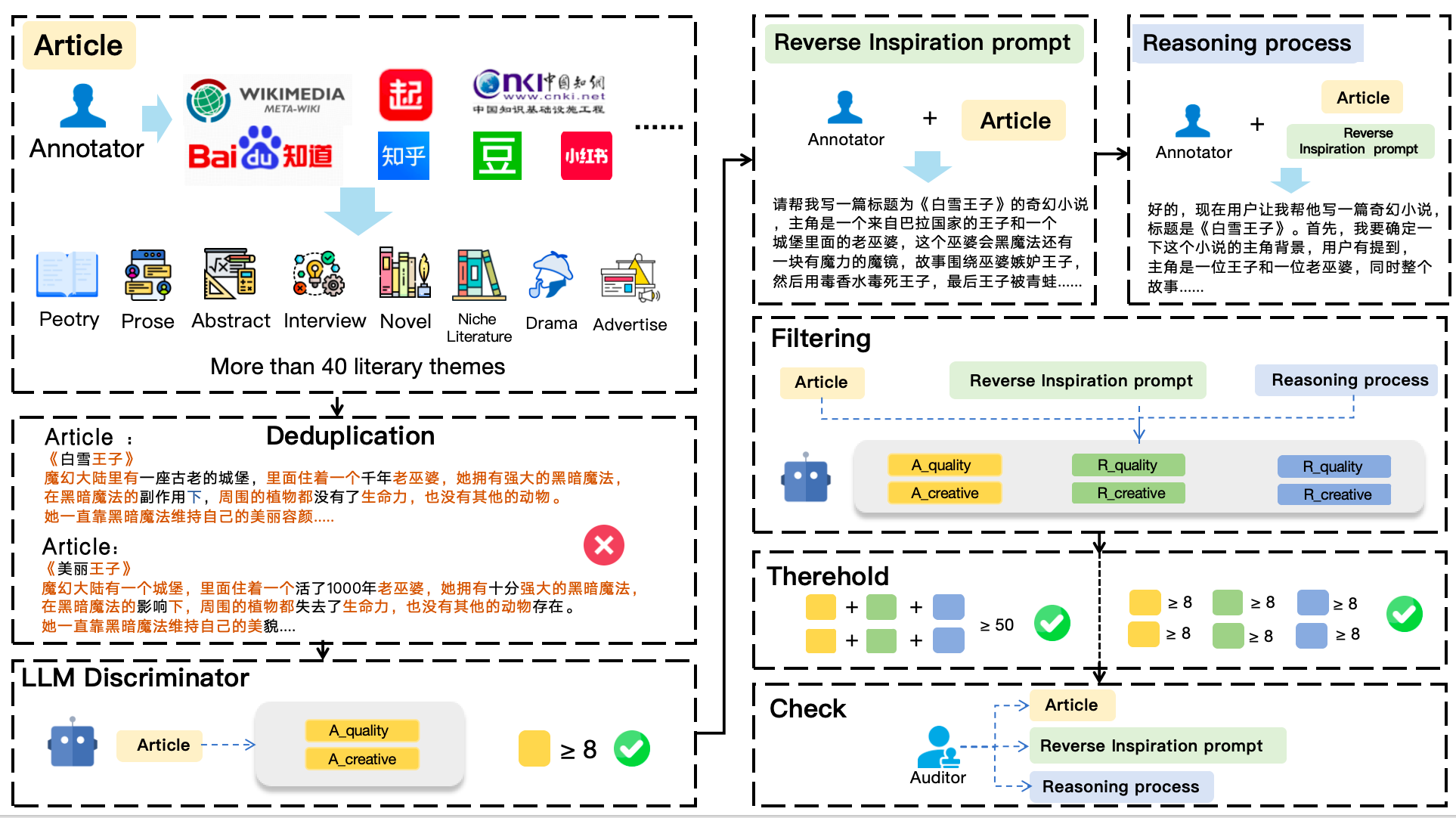

The creation of this dataset was a rigorous, multi-stage process, heavily leveraging a human-in-the-loop approach where Large Language Models (LLMs) assist, but human expertise provides critical evaluation and refinement.

Here's a simplified breakdown:

- Answer Selection: Human annotators first selected high-quality "Answer" texts from reputable online platforms, ensuring they met strict criteria for publication date, quality indicators (like high engagement), and content integrity. LLMs provided an initial quality and creativity assessment.

- Collaborative Query and Thought Generation (Human-AI Interaction): This is the heart of the process.

  - An LLM generated an initial Query based on the Answer. Human annotators then meticulously refined this Query, eliminating "hallucinations," ensuring clarity, and enhancing its specificity.

  - Next, an LLM (often one with deep thinking capabilities) generated an initial Thought process. Human annotators critically reviewed and modified this Thought, ensuring it was insightful, coherent, and truly reflected a strong creative or analytical process. The focus was on "what to achieve, how to do it, and providing relevant examples/reasoning," as if the Answer had not yet been written.

- Scoring and Iteration: Human annotators scored the Answer, refined Query, and refined Thought for quality and creativity. If any component fell below a specified threshold, it was sent back for further refinement.

- Final Quality Assurance: A dedicated Quality Inspector performed a final verification of the entire data instance before inclusion in the dataset.
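The scoring-and-iteration step can be sketched as a small gate that keeps sending low-scoring components back until all of them pass. This is a minimal illustration assuming numeric scores on a single scale and one shared threshold; the post does not state the scale, the threshold value, or any cap on rounds, so `threshold`, `max_rounds`, and the `refine` callback (a stand-in for a human refinement pass) are all hypothetical.

```python
from typing import Callable, Dict, List, Tuple

COMPONENTS = ("answer", "query", "thought")


def below_threshold(scores: Dict[str, float], threshold: float) -> List[str]:
    """Return the components whose score falls below the threshold."""
    return [name for name in COMPONENTS if scores[name] < threshold]


def review_loop(scores: Dict[str, float],
                threshold: float,
                refine: Callable[[str, float], float],
                max_rounds: int = 3) -> Tuple[bool, Dict[str, float]]:
    """Refine failing components until every score meets the threshold.

    `refine` is a hypothetical stand-in for a human pass: it receives a
    component name and its current score and returns the re-assessed score.
    Returns (passed, final_scores); `max_rounds` is an assumed cap.
    """
    current = dict(scores)  # do not mutate the caller's dict
    for _ in range(max_rounds):
        failing = below_threshold(current, threshold)
        if not failing:
            return True, current  # ready for final quality assurance
        for name in failing:
            current[name] = refine(name, current[name])
    return not below_threshold(current, threshold), current
```

Only instances that pass this gate would move on to the final quality inspection; in the real pipeline the "refine" step is human editing, not a function call.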

This iterative process, with its multiple checkpoints, ensures that the Query and Thought components genuinely reflect a high-caliber creative and conceptual pathway to the Answer.

Applications

This dataset is designed to accelerate the development of AI tools that can more effectively assist with and enhance human creativity and analytical writing in Chinese. Potential applications include:

- Educational tools for writing and literary/content analysis.

- Advanced writing assistants capable of suggesting structure, style, and content, going beyond basic grammar checks.

- Systems for enriched content generation that exhibit greater depth, personality, and logical coherence.

- Training models to better understand and replicate complex thought processes for task execution.

Portions of this dataset are already publicly available, and we encourage everyone to stay tuned for more developments in creative writing research!
